Robots Exclusion Checker 1.1.8.1 CRX for Chrome

A Free Developer Tools Extension

Published By https://www.samgipson.com

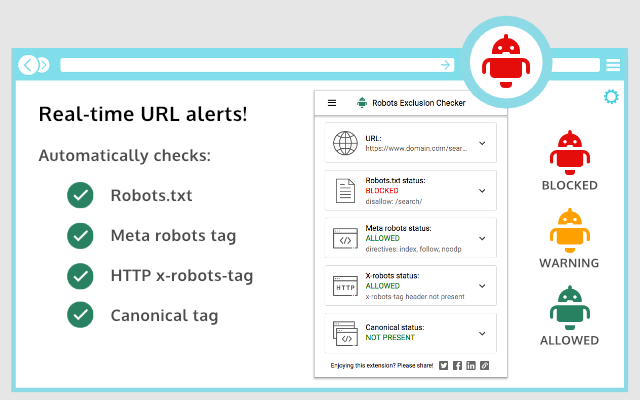

Robots Exclusion Checker (lnadekhdikcpjfnlhnbingbkhkfkddkl): Live URL checks against robots.txt, meta robots, x-robots-tag & canonical tags. Simple Red, Amber & Green status. An SEO Extension.

Robots Exclusion Checker for Chrome

Tech Specs

- Type: Browser Extension

- Latest Version: 1.1.8.1

- Price: Freeware

- Offline: No

- Developer: https://www.samgipson.com

User Reviews

- Rating Average: 5 out of 5

- Rating Users: 27

Download Count

- Total Downloads: 165

- Current Version Downloads: 1

- Updated: May 11, 2022

Robots Exclusion Checker is a free Developer Tools Extension for Chrome. You can download the latest CRX file, or an older version, and install it.

More About Robots Exclusion Checker

The extension reports on 4 elements:

1. Robots.txt

2. Meta Robots in the HTML

3. X-robots-tag in the HTTP header

4. Rel=Canonical in the HTML and HTTP header

Robots.txt

If the URL you are visiting is affected by an “Allow” or “Disallow” rule in robots.txt, the extension shows you the specific rule, making it easy to copy it or visit the live robots.txt. You will also be shown the full robots.txt with the specific rule highlighted (if applicable). Cool eh!
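To illustrate the idea of finding the specific rule that affects a URL, here is a minimal Python sketch. It is not the extension's code. The function name `find_matching_rule` is hypothetical, and the sketch assumes plain prefix matching (no `*` or `$` wildcards), an exact user-agent match with a fallback to the `*` group, and longest-match precedence with Allow winning ties.

```python
from typing import Optional, Tuple


def find_matching_rule(robots_txt: str, user_agent: str, path: str) -> Optional[Tuple[str, str]]:
    """Return (directive, pattern) for the longest prefix rule matching path, or None.

    Simplified on purpose: prefix matching only, no wildcard support.
    """
    groups = {}           # lowercase user-agent -> list of (directive, pattern)
    current_agents = []   # agents named by the group currently being read
    reading_rules = False
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if reading_rules:  # a User-agent line after rules starts a new group
                current_agents = []
                reading_rules = False
            current_agents.append(value.lower())
            groups.setdefault(value.lower(), [])
        elif field in ("allow", "disallow"):
            reading_rules = True
            if value:  # an empty Disallow blocks nothing
                for agent in current_agents:
                    groups[agent].append((field, value))
    rules = groups.get(user_agent.lower(), groups.get("*", []))
    matches = [rule for rule in rules if path.startswith(rule[1])]
    if not matches:
        return None
    # Longest pattern wins; on a tie, prefer "allow" (assumed precedence).
    return max(matches, key=lambda rule: (len(rule[1]), rule[0] == "allow"))


robots = "User-agent: *\nDisallow: /private/\nAllow: /private/public/\n"
print(find_matching_rule(robots, "Googlebot", "/private/public/page.html"))
```

A real checker would also need wildcard handling and looser user-agent token matching, both of which are left out here.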

Meta Robots Tag

Any Robots Meta tags that direct robots to “index”, “noindex”, “follow” or “nofollow” will flag the appropriate Red, Amber or Green icons. Directives that won’t affect Search Engine indexation, such as “nosnippet” or “noodp”, will be shown but won’t be factored into the alerts. The extension makes it easy to view all directives, and shows you in full any HTML meta robots tags that appear in the source code.
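The split between directives that drive the alerts and directives that are only displayed can be sketched as follows. This is an illustrative Python example, not the extension's implementation. The names `MetaRobotsParser`, `split_directives` and `ALERT_DIRECTIVES` are hypothetical, and the mapping from directives to Red, Amber or Green is deliberately left out because the description above does not spell it out.

```python
from html.parser import HTMLParser

# Directives the description says are factored into the alerts.
ALERT_DIRECTIVES = {"index", "noindex", "follow", "nofollow"}


class MetaRobotsParser(HTMLParser):
    """Collects directives from every <meta name="robots" content="..."> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() == "robots":
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",") if d.strip()]


def split_directives(html: str):
    """Return (alerting, informational) directive lists found in the HTML."""
    parser = MetaRobotsParser()
    parser.feed(html)
    alerting = [d for d in parser.directives if d in ALERT_DIRECTIVES]
    informational = [d for d in parser.directives if d not in ALERT_DIRECTIVES]
    return alerting, informational


page = '<html><head><meta name="robots" content="noindex, nosnippet"></head></html>'
print(split_directives(page))
```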

X-robots-tag

Spotting any robots directives in the HTTP header has been a bit of a pain in the past but no longer with this extension. Any specific exclusions will be made very visible, as well as the full HTTP Header - with the specific exclusions highlighted too!
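As a rough illustration of what checking the HTTP header involves, the Python sketch below pulls directives out of any X-Robots-Tag headers in a response. The function names are hypothetical, headers are assumed to arrive as a list of `(name, value)` pairs, and the optional user-agent prefix form (for example `googlebot: noindex`) is not handled.

```python
def x_robots_directives(headers):
    """Return lowercase directives from all X-Robots-Tag headers, in order."""
    directives = []
    for name, value in headers:
        if name.lower() == "x-robots-tag":  # header names are case-insensitive
            directives += [d.strip().lower() for d in value.split(",") if d.strip()]
    return directives


def blocks_indexing(headers):
    """True if a header carries "noindex" or "none" ("none" implies noindex, nofollow)."""
    found = set(x_robots_directives(headers))
    return "noindex" in found or "none" in found


response_headers = [
    ("Content-Type", "text/html"),
    ("X-Robots-Tag", "noindex, nofollow"),
]
print(x_robots_directives(response_headers), blocks_indexing(response_headers))
```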

Canonical Tags

Although the canonical tag doesn’t directly impact indexation, it can still impact how your URLs behave within SERPs (Search Engine Results Pages). If the page you are viewing is Allowed to bots but a Canonical mismatch has been detected (the current URL is different to the Canonical URL), the extension will flag an Amber icon. Canonical information is collected on every page for your convenience.
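A canonical mismatch check can be sketched in a few lines of Python. This is an assumption-laden illustration rather than the extension's logic. The function name `canonical_mismatch` is hypothetical, relative canonical values are resolved against the current URL, and the hash part of both URLs is ignored before comparing.

```python
from urllib.parse import urldefrag, urljoin


def canonical_mismatch(current_url: str, canonical_href: str) -> bool:
    """True if the canonical URL differs from the current URL, ignoring #fragments."""
    current, _ = urldefrag(current_url)
    # Resolve a relative canonical (e.g. "/page") against the current URL first.
    canonical, _ = urldefrag(urljoin(current_url, canonical_href))
    return current != canonical


print(canonical_mismatch("https://example.com/a?x=1#top", "https://example.com/a?x=1"))
print(canonical_mismatch("https://example.com/a?x=1", "/a"))
```

The comparison is a strict string match after those two steps, so differences such as a trailing slash or letter case in the host would still count as a mismatch in this sketch.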

Within Settings you can choose between the following user-agents:

1. Googlebot

2. Googlebot news

3. Bing

4. Yahoo

This tool will be useful for anyone working in Search Engine Optimisation (SEO) or digital marketing, as it gives a clear visual indication of whether the page is being blocked by robots.txt (many existing extensions don’t flag this). Crawl or indexation issues have a direct bearing on how well your website performs in organic results, so this extension should be part of your SEO developer toolkit for Google Chrome. It is an alternative to some of the common robots.txt testers available online.

This extension is useful for:

- Faceted navigation review and optimisation (useful to see the robot control behind complex / stacked facets)

- Detecting crawl or indexation issues

- General SEO review and auditing within your browser

CHANGELOG:

1.0.2: Fixed a bug preventing meta robots from updating after a URL update.

1.0.3: Various bug fixes, including better handling of URLs with encoded characters. Robots.txt expansion feature to allow the viewing of extra-long rules. Now JavaScript history.pushState() compatible.

1.0.4: Various upgrades. Canonical tag detection added (HTML and HTTP Header) with Amber icon alerts. Robots.txt is now shown in full, with the appropriate rule highlighted. X-robots-tag now highlighted within full HTTP header information. Various UX improvements, such as “Copy to Clipboard” and “View Source” links. Social share icons added.

1.0.5: Forces a background HTTP header call when the extension detects a URL change but no new HTTP header info - mainly for sites heavily dependent on JavaScript.

1.0.6: Fixed an issue with the hash part of the URL when doing a canonical check.

1.0.7: Forces a background body response call in addition to HTTP headers, to ensure a non-cached view of the URL for JavaScript-heavy sites.

Found a bug or want to make a suggestion? Please email extensions@samgipson.com